Why choose our services

OUR COMPANY FEATURES

Technology is our vision and future

Vision & Core Values

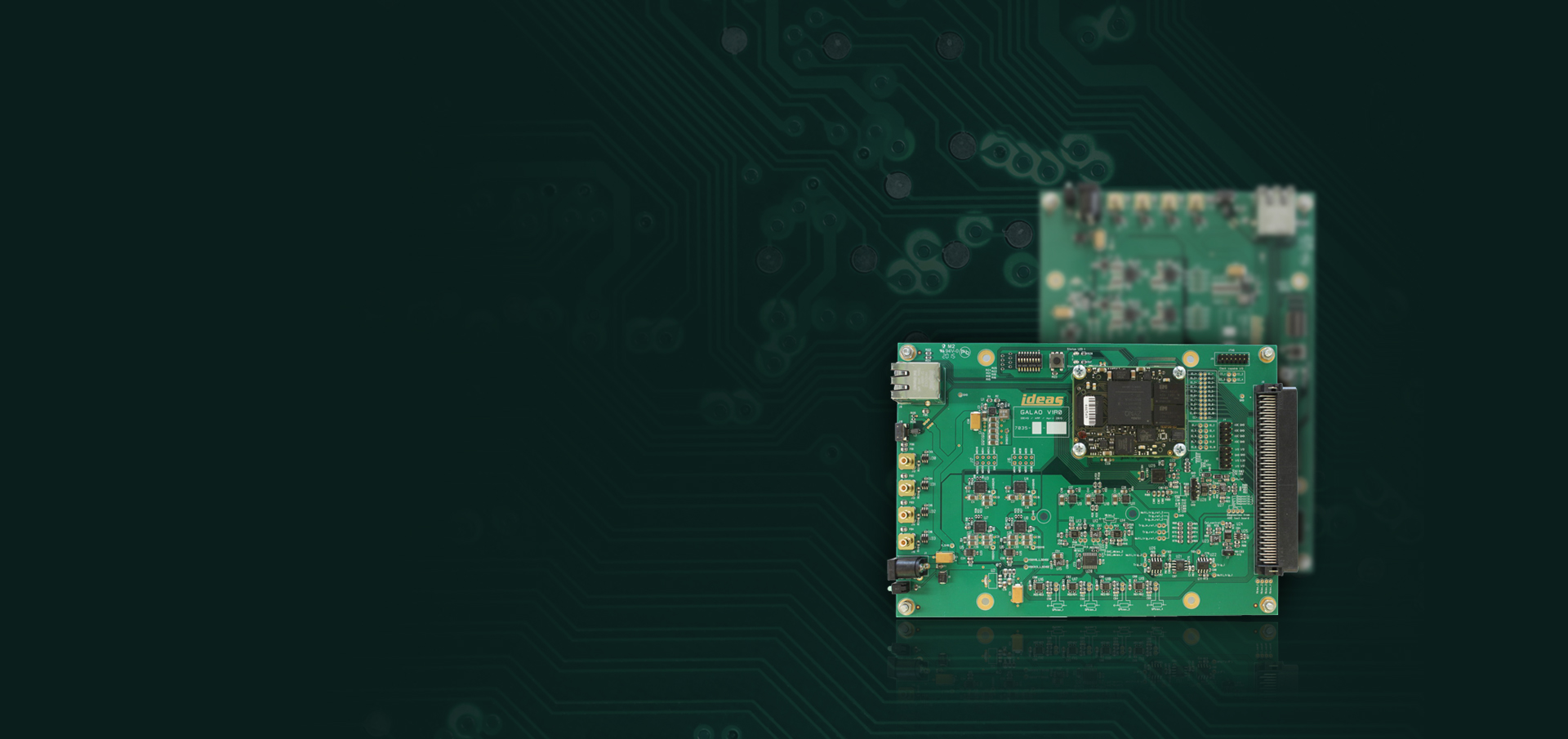

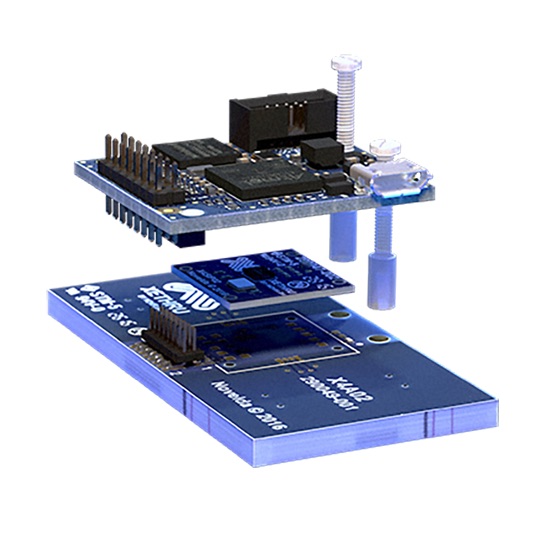

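Laonuri (라온우리) is a native Korean expression meaning "joyful us," and it stands for our promise to do our best to satisfy every customer who works with us. We also aim to be a partner that gives developers everything they need, so we always do our best to supply what companies require, including measurement instruments, ultra-precision motion control, components, and system integration.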

Learn more about Laonuri R&D

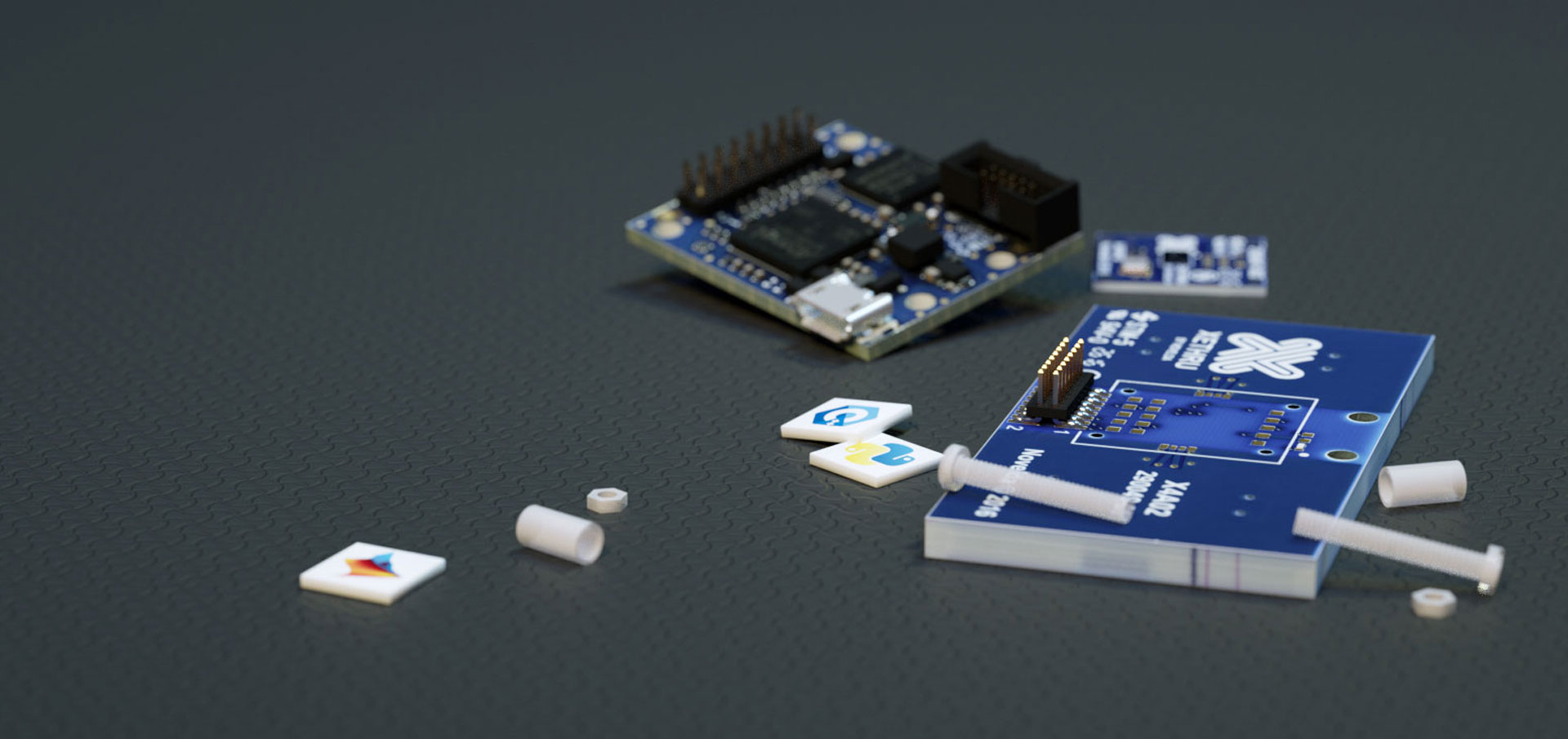

R&D Fields

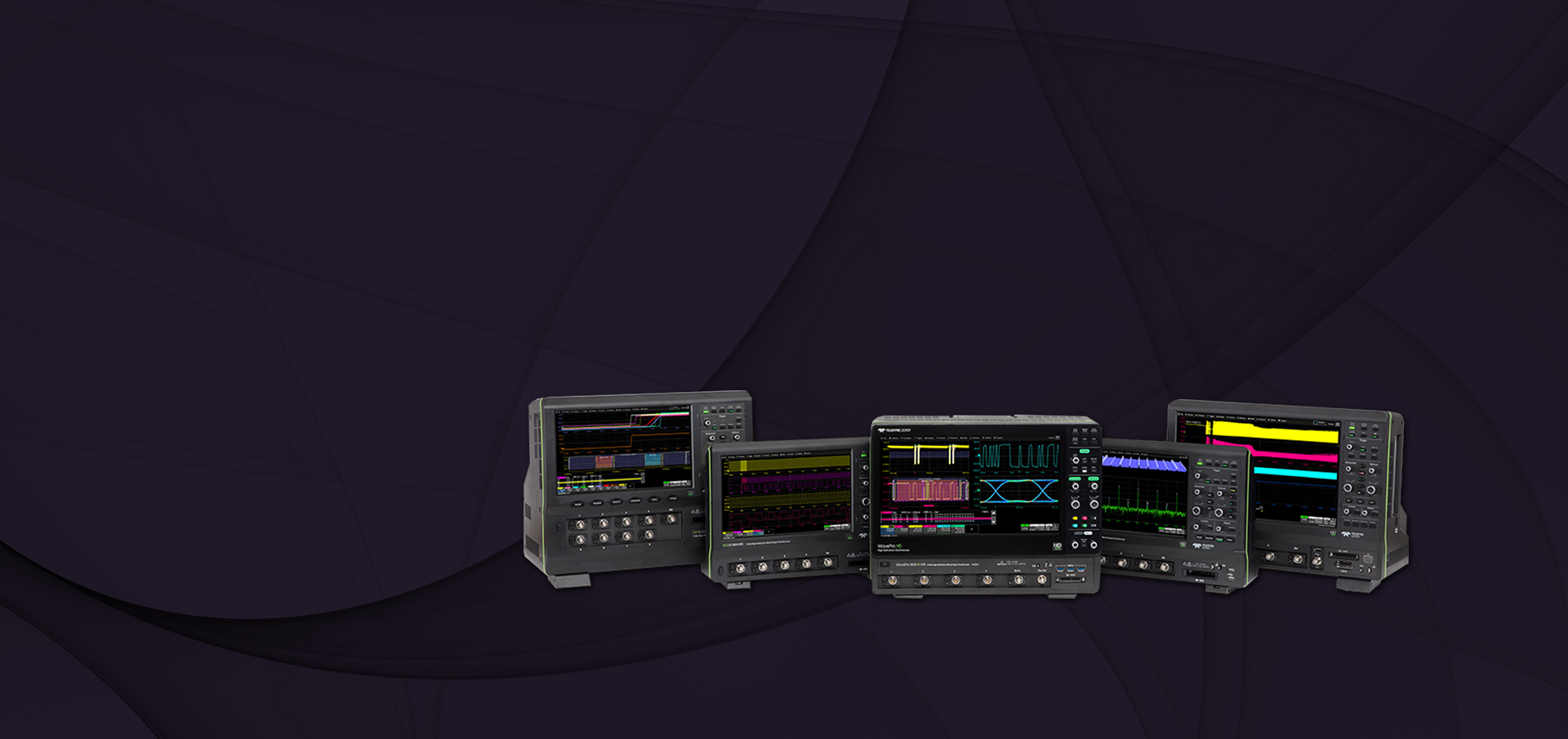

Meet our Partners